Add a Voice Assistant to Your React Native App

Build voice-powered mobile experiences with VibeFast. Real-time conversations, natural-sounding AI voices, and a beautiful conversation UI—all ready for iOS and Android.

Complete Voice Stack

Everything you need for voice-powered apps, from audio capture to AI responses.

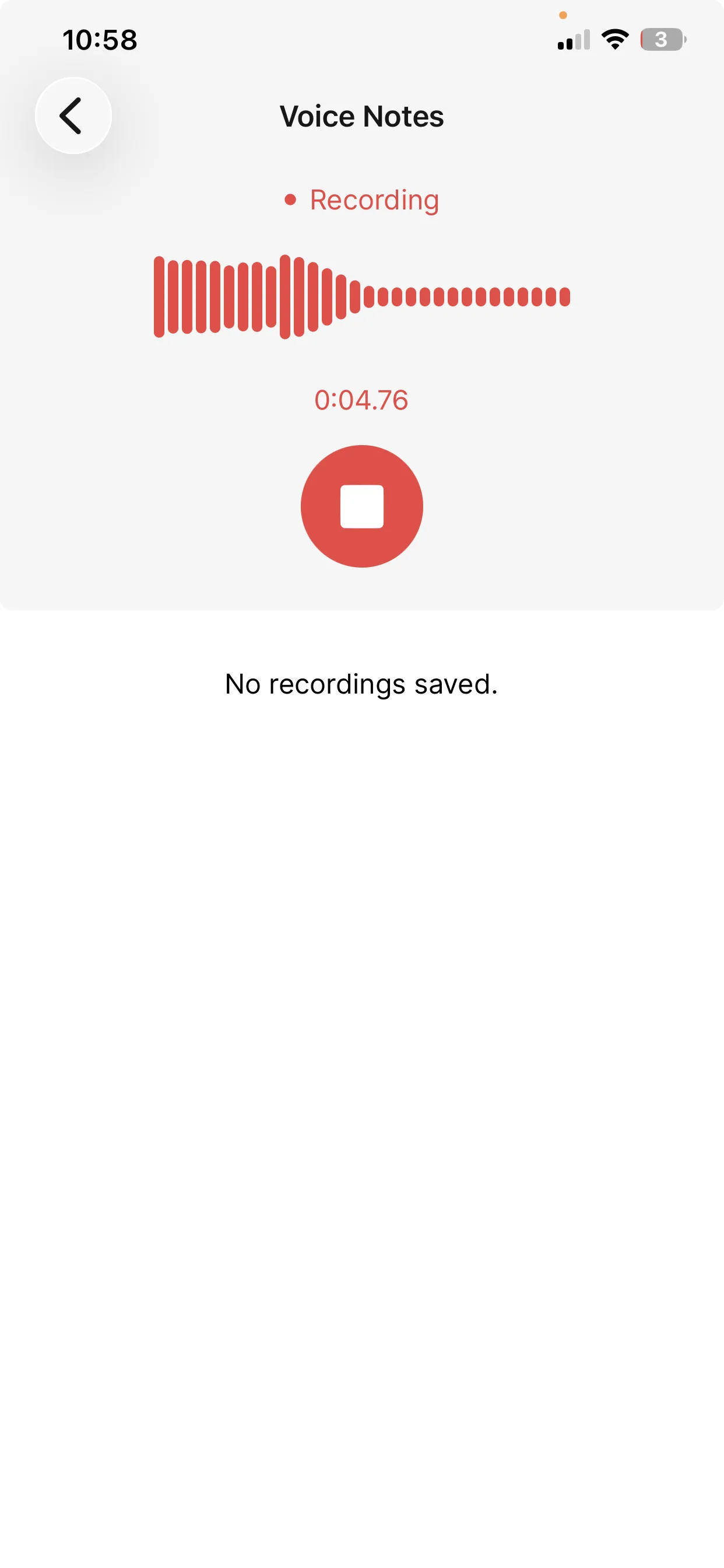

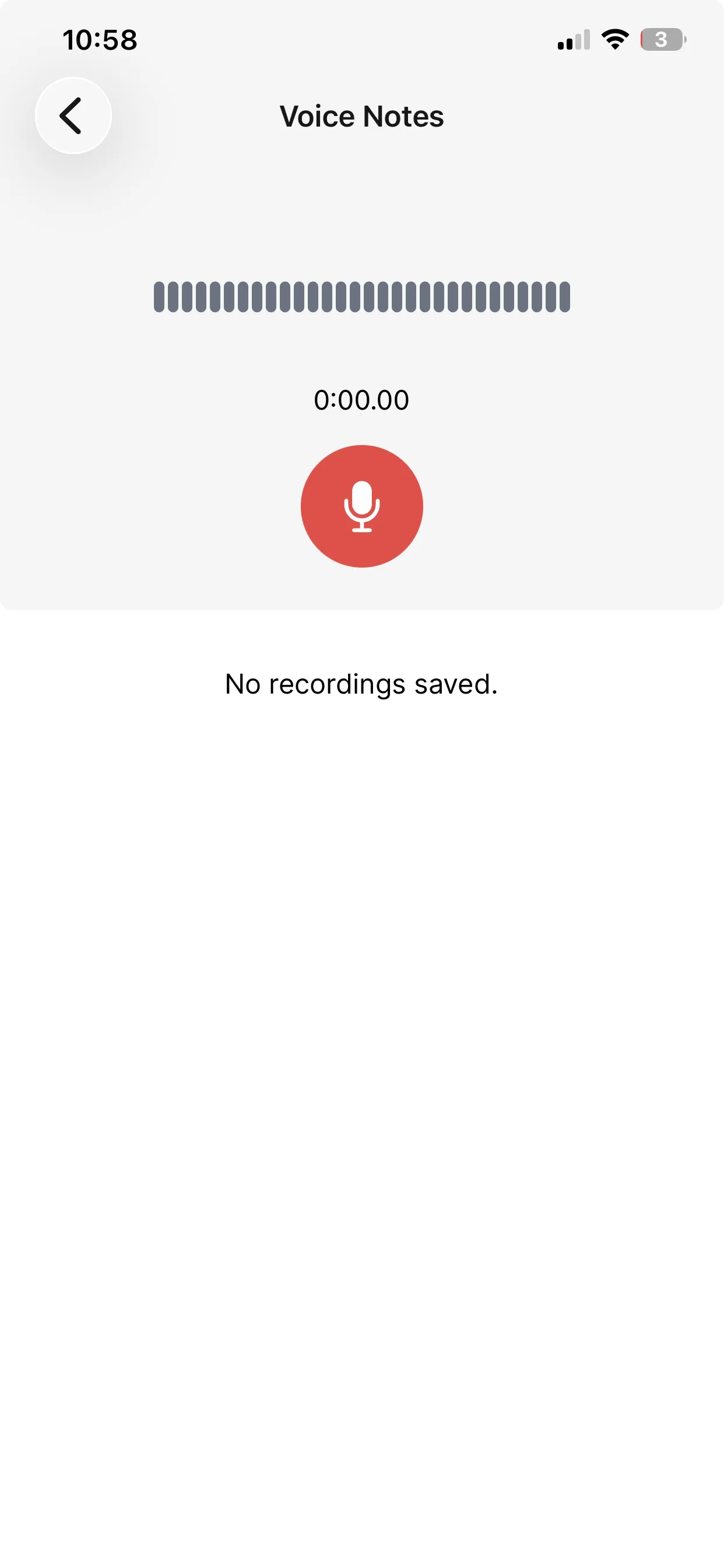

Real-Time Voice Input

Live voice capture with WebRTC, automatic speech detection, and silence handling.

AI Voice Responses

ElevenLabs voices for natural-sounding responses. Multiple voice options available.

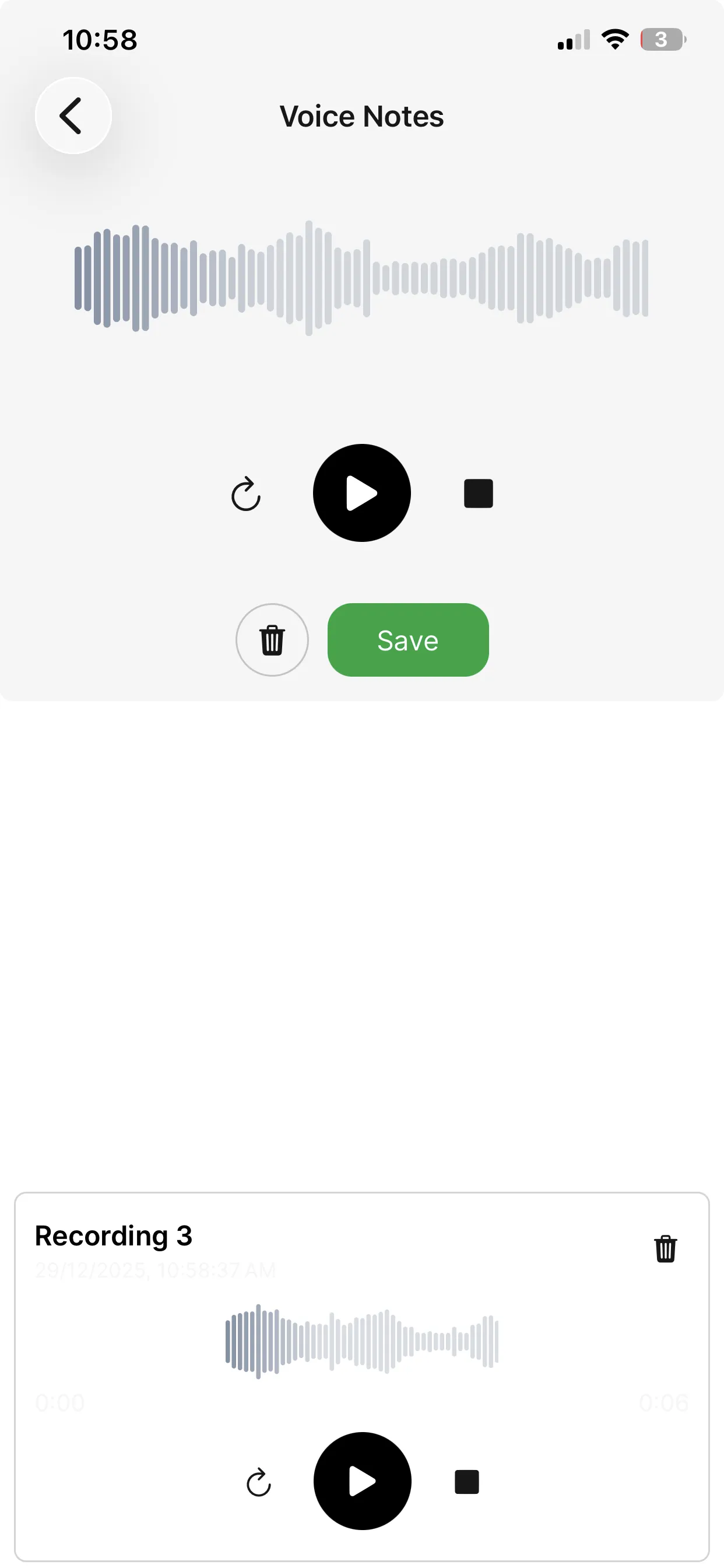

Conversation UI

Beautiful conversation screen with status indicators, controls, and message history.

LiveKit Integration

Real-time audio streaming with LiveKit. Low latency, reliable connections.

Configurable Agents

Create different AI personas and agents with custom prompts and behaviors.

Secure Token Flow

Server-side token generation keeps your API keys safe. Backend integration included.

What's Included

Simple Integration

Start a voice conversation with a few lines of code.

    import { useState } from 'react';
    import { View } from 'react-native';

    import { useVoiceBot } from "@/features/voice-bot/services/use-voice-bot";
    // Button, VoiceStatus, and MessageList are UI components from your project;
    // import them from wherever they live in your codebase.

    export default function VoiceAssistantScreen() {
      // Conversation transcript, appended to as messages arrive.
      const [messages, setMessages] = useState([]);

      const { actions, state } = useVoiceBot({
        agentId: process.env.EXPO_PUBLIC_ELEVENLABS_AGENT_ID!,
      }, {
        onMessage: (msg) => setMessages(prev => [...prev, msg]),
        onError: (err) => console.error(err),
      });

      return (
        <View className="flex-1 items-center justify-center">
          <VoiceStatus status={state.status} />
          <MessageList messages={messages} />
          {/* Show the end button only while a conversation is active. */}
          {state.status === 'connected' ? (
            <Button onPress={actions.endConversation}>
              End Conversation
            </Button>
          ) : (
            <Button onPress={actions.startConversation}>
              Start Conversation
            </Button>
          )}
        </View>
      );
    }

Save Weeks of Development

| Feature | VibeFast | From Scratch |
|---|---|---|
| ElevenLabs integration | Included | 2-3 days |
| LiveKit WebRTC setup | Included | 3-5 days |
| Conversation UI | Included | 2-3 days |
| Token authentication | Included | 1 day |
| Native module config | Included | 1-2 days |
| Error handling | Included | 1 day |
| State management | Included | 1 day |
| Total Time | Ready today | 2-3 weeks |

Frequently Asked Questions

What voice providers does it support?

VibeFast uses ElevenLabs for voice synthesis and LiveKit for real-time audio streaming. ElevenLabs provides natural-sounding AI voices, and LiveKit handles the WebRTC connection for low-latency audio. To switch voices and behaviors, point the app at a different ElevenLabs agent ID.

Does it work offline?

The voice assistant requires an internet connection for real-time AI conversations. However, the UI components handle offline states gracefully with appropriate messaging. For offline voice recognition, you'd need to integrate a local speech-to-text solution.
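As a rough illustration of what handling offline states can look like, the sketch below maps a connectivity flag and a conversation status to a user-facing message. It is not VibeFast's actual component code: `statusMessage` and the `VoiceStatus` type are hypothetical, only the `'connected'` status appears in the integration example on this page, and the connectivity flag is assumed to come from a library such as `@react-native-community/netinfo`.

```typescript
// Only "connected" is taken from the integration example; the other
// status values are assumptions made for this sketch.
type VoiceStatus = "idle" | "connecting" | "connected" | "error";

// Picks the message to show under the conversation controls.
// Being offline takes priority over any conversation status.
function statusMessage(isOnline: boolean, status: VoiceStatus): string {
  if (!isOnline) {
    return "You're offline. Reconnect to talk to the assistant.";
  }
  switch (status) {
    case "connecting":
      return "Connecting...";
    case "connected":
      return "Listening";
    case "error":
      return "Something went wrong. Try again.";
    default:
      return "Tap to start a conversation";
  }
}
```

Checking connectivity first means the user sees a single clear reason when a conversation cannot start, instead of a generic connection error.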

How do I set up the backend?

VibeFast includes backend functions (Convex or Supabase) that generate secure tokens for ElevenLabs/LiveKit sessions. You set your ElevenLabs API key in environment variables, and the mobile app requests tokens from your backend. This keeps your API keys secure.
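The token flow described above can be sketched as a small backend handler. This is an illustration under stated assumptions, not VibeFast's actual Convex or Supabase code: `createTokenHandler`, `MintToken`, and the request and response shapes are hypothetical names, and the call to the voice provider is injected as a function so the sketch does not presume any particular ElevenLabs or LiveKit endpoint.

```typescript
// Hypothetical shapes for illustration; the real backend functions may differ.
type TokenRequest = { userId: string | null; agentId: string };
type TokenResponse = { status: number; token?: string; error?: string };

// Assumed signature: calls the voice provider with the secret key and
// resolves to a short-lived session token.
type MintToken = (agentId: string, apiKey: string) => Promise<string>;

function createTokenHandler(apiKey: string | undefined, mintToken: MintToken) {
  return async (req: TokenRequest): Promise<TokenResponse> => {
    // The API key lives in server-side environment variables only and is
    // never included in a response.
    if (!apiKey) {
      return { status: 500, error: "Voice provider API key is not configured" };
    }
    // Only signed-in users may request a session token.
    if (!req.userId) {
      return { status: 401, error: "Sign in to start a conversation" };
    }
    try {
      const token = await mintToken(req.agentId, apiKey);
      return { status: 200, token };
    } catch {
      // Keep provider error details on the server.
      return { status: 502, error: "Could not create a voice session" };
    }
  };
}
```

The mobile app only ever receives the short-lived token, so a leaked app bundle or intercepted request does not expose the long-lived API key.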

Does it require native code?

Yes. The voice assistant uses LiveKit's Expo plugin, which requires a development build (prebuild). VibeFast includes the necessary configuration for both iOS and Android. You can't use this feature with Expo Go, but prebuild is a one-time setup.
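VibeFast ships this configuration already, so the following is only for orientation. It is a minimal sketch of how config plugins for voice might be registered in an Expo config. `withVoicePlugins` and the `AppConfig` type are hypothetical helpers written for this example, and the two package names follow LiveKit's published Expo setup instructions; treat them as an assumption and check them against the versions installed in your project.

```typescript
// Minimal stand-in for Expo's config type so the sketch is self-contained.
type PluginEntry = string | [string, unknown];
type AppConfig = { name: string; slug: string; plugins?: PluginEntry[] };

// Assumed plugin package names; verify against your installed dependencies.
const VOICE_PLUGINS: string[] = [
  "@livekit/react-native-expo-plugin",
  "@config-plugins/react-native-webrtc",
];

// Returns a copy of the config with the voice plugins appended,
// skipping any that are already registered.
function withVoicePlugins(config: AppConfig): AppConfig {
  const existing = config.plugins ?? [];
  const names = existing.map((p) => (typeof p === "string" ? p : p[0]));
  const missing = VOICE_PLUGINS.filter((name) => names.indexOf(name) === -1);
  return { ...config, plugins: [...existing, ...missing] };
}
```

In an `app.config.ts` you would export the result of `withVoicePlugins(...)` as the default export and then create a development build, since Expo Go cannot load these native modules.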

Can I use different AI models for the assistant?

The voice synthesis uses ElevenLabs, but you can customize the AI behavior through ElevenLabs agents. Create different agents with different prompts, personalities, and knowledge bases in the ElevenLabs dashboard, then reference them by agent ID in your app.
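One way to reference several agents by ID is a small lookup keyed by persona. This is a sketch under assumptions: the persona names, the `resolveAgentId` helper, and the extra environment variable names in the comments are hypothetical; only `agentId` and `useVoiceBot` come from the integration example on this page.

```typescript
// Hypothetical persona names for illustration.
type Persona = "support" | "tutor";
type AgentIds = Record<Persona, string | undefined>;

// Looks up the agent ID for a persona and fails loudly if it is missing,
// rather than starting a conversation with an undefined ID.
function resolveAgentId(persona: Persona, agentIds: AgentIds): string {
  const agentId = agentIds[persona];
  if (!agentId) {
    throw new Error(`No ElevenLabs agent ID configured for persona "${persona}"`);
  }
  return agentId;
}

// Example wiring in the app (variable names are assumptions):
// const agentIds: AgentIds = {
//   support: process.env.EXPO_PUBLIC_ELEVENLABS_SUPPORT_AGENT_ID,
//   tutor: process.env.EXPO_PUBLIC_ELEVENLABS_TUTOR_AGENT_ID,
// };
// useVoiceBot({ agentId: resolveAgentId("tutor", agentIds) }, callbacks);
```

The map is built from static `process.env.NAME` references because Expo inlines `EXPO_PUBLIC_` variables at build time and does not support looking them up by a computed name.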

How is the voice quality?

ElevenLabs provides some of the most natural-sounding AI voices available. You can choose from multiple voice options and customize speaking style. The LiveKit integration ensures low-latency audio streaming for responsive conversations.

Ready to Add Voice to Your App?

Get VibeFast and launch a voice-powered app this month.

One-time purchase. Lifetime updates. Commercial license included.